Maple provides a collection of commands for numerically solving optimization problems, which involve finding the minimum or maximum of an objective function, possibly subject to constraints. The Optimization package enables you to solve linear programs (LPs), quadratic programs (QPs), nonlinear programs (NLPs), and both linear and nonlinear least-squares problems. It handles both constrained and unconstrained problems and accepts a wide range of input formats.
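As a concrete picture of the simplest of these problem classes, the sketch below solves a linear least-squares problem: fit a line $y \approx a + b x$ by minimizing $\sum_i (a + b x_i - y_i)^2$. It is plain Python, not Maple code, and it uses the closed-form normal equations for two unknowns; it is not meant to show how the Optimization package solves such problems internally. The function name `fit_line` is hypothetical.

```python
def fit_line(xs, ys):
    """Minimize sum_i (a + b*x_i - y_i)^2 over (a, b) using the normal equations.

    Hypothetical helper for illustration; assumes at least two distinct x values.
    """
    n = len(xs)
    sx = sum(xs)
    sy = sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    det = n * sxx - sx * sx  # nonzero when the x values are not all equal
    b = (n * sxy - sx * sy) / det  # slope
    a = (sy - b * sx) / n  # intercept
    return a, b


# Points lying exactly on y = 2 + 3x, so the residual at the optimum is zero.
print(fit_line([0, 1, 2, 3], [2, 5, 8, 11]))
```

With noisy data the same formulas return the line with the smallest sum of squared residuals rather than an exact fit.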

You can also use Maple to perform global optimization. For more details, see the Maple Global Optimization Toolbox.

Maple

Powerful math software that is easy to use

• Maple for Academic • Maple for Students • Maple Learn • Maple Calculator App • Maple for Industry and Government • Maple Flow • Maple for Individuals

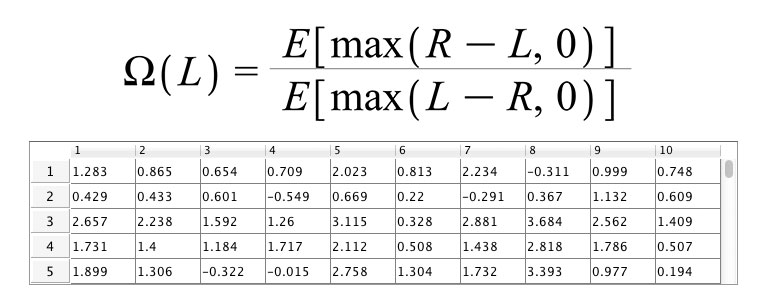

$\operatorname{Var}\left(\sum_{i=1}^{n} x_i R_i\right)$, which can be shown to be equal to $\sum_{i=1}^{n}\sum_{j=1}^{n} x_i x_j \operatorname{Cov}(R_i, R_j)$.

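The identity $\operatorname{Var}\left(\sum_{i=1}^{n} x_i R_i\right) = \sum_{i=1}^{n}\sum_{j=1}^{n} x_i x_j \operatorname{Cov}(R_i, R_j)$ follows from the bilinearity of covariance. The minimal Python sketch below checks it numerically on simulated data. It is not Maple code; the simulated return series, the weights, and the function names are hypothetical. Because the sample covariance is also bilinear, the two computations agree up to floating-point rounding, not merely in expectation.

```python
import random


def sample_cov(a, b):
    """Unbiased sample covariance of two equal-length sequences."""
    n = len(a)
    ma = sum(a) / n
    mb = sum(b) / n
    return sum((ai - ma) * (bi - mb) for ai, bi in zip(a, b)) / (n - 1)


def variance_direct(weights, returns):
    """Sample variance of the combined series sum_i x_i R_i.

    returns[i][t] is the t-th observation of R_i (hypothetical layout).
    """
    T = len(returns[0])
    combined = [sum(w * r[t] for w, r in zip(weights, returns)) for t in range(T)]
    return sample_cov(combined, combined)


def variance_double_sum(weights, returns):
    """Double sum over i, j of x_i * x_j * Cov(R_i, R_j)."""
    n = len(weights)
    return sum(
        weights[i] * weights[j] * sample_cov(returns[i], returns[j])
        for i in range(n)
        for j in range(n)
    )


random.seed(0)
# Three simulated series of 250 observations each (made-up data).
returns = [[random.gauss(0.01, 0.05) for _ in range(250)] for _ in range(3)]
weights = [0.5, 0.3, 0.2]

direct = variance_direct(weights, returns)
double_sum = variance_double_sum(weights, returns)
print(direct, double_sum)
```

The double-sum form is the one that is quadratic in the weights, which is why problems that minimize this quantity fit the quadratic-programming class.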